AI is catching on in the Cloud Native Ecosystem

Artificial Intelligence looks different with Kubernetes

As Head of Ecosystem at CNCF, I have a unique vantage point to observe our industry's evolution. This newsletter focuses on what’s top of mind for me, and today it's the convergence between traditional infrastructure and application development. I am catching up on what’s going on in the cloud native ecosystem, and this is my attempt to do that out loud.

Bridging Two Worlds: Reflections from KubeCon

I attended KubeCon this week in my new role at the CNCF. I spent a lot of time answering the same question repeatedly: "What trends are you seeing in the Cloud Native space?"

The easy answer is AI, but I appreciate that this community is skeptical: using AI as a simple marketing answer is not acceptable. My perspective is somewhat unique. Having straddled both platform and front-end engineering throughout my career, I can see how these traditionally separate domains are converging in fascinating ways.

The Changing Landscape of Tools

Great engineers excel with or without AI because they understand when and where to leverage available resources.

In front-end development, we're witnessing this new era of "vibe coding." I spent countless hours last weekend building prototypes using prompts and vibes. By Sunday evening, I had three different ideas built from bolt.new and live on a production URL powered by Netlify (e.g., pull2press.com - app to generate blog posts from pull requests).

That experience was cool, but I don't see a path for vibe coding cloud-native infrastructure with that same seamless experience. The cloud native community is focused instead on building the foundation that powers the next wave of serious AI infrastructure.

What does AI mean for Platform Developers?

The real excitement centers around managing GPU workloads and orchestrating multi-cluster, AI-ready environments. Developers are envisioning how cloud-native technologies can support and scale AI infrastructure. I don't anticipate entire infrastructure being generated with code, but I do see a future where existing platform engineers start enabling their ecosystems with LLMs and GPU-powered inference.

But first, we need to figure out the basics for building air-gapped MCP and LLM solutions in multi-cluster workflows. There are too many enterprise engineers with a distrust of tools they can't control. The good news is we know we will get there this year.

I'm placing all my bets on this AI-enabled cloud-native era—and from the conversations I'm having, I'm far from alone.

Demystifying Multi-Cluster AI-Ready Environments

When I talk about "multi-cluster AI-ready environments," I'm referring to Kubernetes infrastructure specifically designed to handle the unique demands of AI workloads across multiple clusters. But what does this actually mean in practice?

At its core, these environments solve several critical challenges:

Resource Orchestration: AI workloads, particularly training jobs, devour resources. Multi-cluster environments intelligently distribute these workloads across available hardware resources, ensuring GPUs don't sit idle in one cluster while another struggles under heavy load.

Specialized Hardware Management: Unlike traditional applications, AI workloads often require specialized accelerators (GPUs, TPUs, etc.). These environments provide the tooling to efficiently schedule and utilize this expensive hardware.

Data Gravity Solutions: AI models need access to massive datasets. Multi-cluster environments help solve the "data gravity" problem by orchestrating workloads closer to where data resides, reducing latency and transfer costs.

Scaling Flexibility: These environments can burst training or inference workloads across clusters during peak demand while staying cost-efficient during quieter periods.
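To make the orchestration and data gravity ideas above concrete, here is a minimal sketch of a placement decision. It is a toy model, not how any real multi-cluster scheduler works: the `Cluster` and `Job` types, the cluster names, and the `place` function are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cluster:
    name: str
    free_gpus: int
    datasets: frozenset  # names of datasets stored close to this cluster


@dataclass
class Job:
    name: str
    gpus: int
    dataset: str


def place(job: Job, clusters: List[Cluster]) -> Optional[Cluster]:
    """Pick a cluster for the job, or return None if nothing fits."""
    # Only clusters with enough idle GPUs are candidates.
    candidates = [c for c in clusters if c.free_gpus >= job.gpus]
    if not candidates:
        return None
    # Prefer a cluster that already holds the dataset (data gravity),
    # then the one with the most idle GPUs (avoid stranded hardware).
    best = max(candidates, key=lambda c: (job.dataset in c.datasets, c.free_gpus))
    best.free_gpus -= job.gpus  # reserve the GPUs on the chosen cluster
    return best


clusters = [
    Cluster("us-east", free_gpus=8, datasets=frozenset({"images"})),
    Cluster("eu-west", free_gpus=16, datasets=frozenset({"logs"})),
]
first = place(Job("train-vision", gpus=4, dataset="images"), clusters)
second = place(Job("train-large", gpus=12, dataset="images"), clusters)
third = place(Job("too-big", gpus=32, dataset="logs"), clusters)
print(first.name, second.name, third)
```

The first job lands next to its data. The second needs more GPUs than that cluster has left, so it spills over to the other cluster, which is the bursting behavior described above in miniature. The third fits nowhere and is left unplaced.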

I'm seeing platform teams struggle with these challenges firsthand. Many are cobbling together solutions with existing tools, but purpose-built systems for AI workloads are rapidly emerging in the ecosystem. This isn't just about running a model—it's about creating the infrastructure foundation that makes enterprise AI practical and sustainable.

Reads

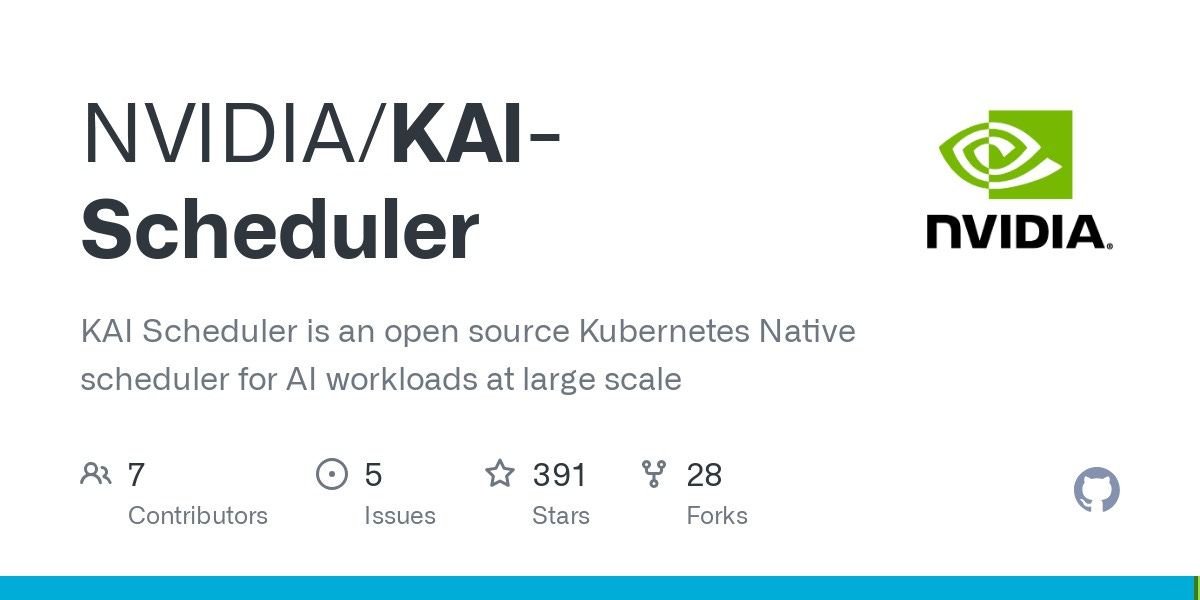

NVIDIA's AI Scheduling Solution

https://github.com/NVIDIA/KAI-Scheduler

NVIDIA's Kubernetes AI scheduler optimizes GPU resource allocation for ML workloads. Designed for efficient orchestration of AI training and inference jobs, it intelligently manages compute resources across clusters.

This is one of the first of many launches we will likely see from the Run:ai team, which NVIDIA finished acquiring in December 2024.

MCP for AI Infrastructure

Insightful post on building multi-cluster platforms for GenAI workloads. Discusses air-gapped solutions, enterprise requirements, and the challenges of scaling AI infrastructure in regulated environments.

Go Team Building Official MCP SDK

Google's Go team is partnering with Anthropic to develop an official Go SDK for the Model Context Protocol (MCP), giving Go developers a native way to build MCP clients and servers.

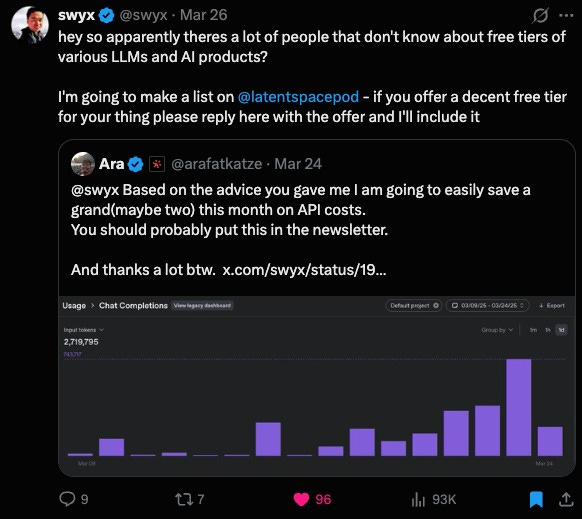

Swyx on AI Coding Evolution

Thread exploring how AI coding tools are evolving beyond simple code completion to more sophisticated capabilities, highlighting the transition from autocomplete to autonomous agents that can understand and implement complex development tasks.

The real value was finding out how to get 10 million tokens from OpenAI by sharing your data with them. Use at your own risk.

Bridging Theory and Practice in Platform Engineering

The conversations I've been having with platform teams reveal a widening gap between theoretical discussions about AI infrastructure and the practical realities of implementation. Many organizations are still grappling with the fundamentals:

Implementing effective GPU quota management across teams

Creating sensible defaults for resource requests/limits for AI workloads

Building robust monitoring and observability for model training jobs

Implementing cost controls that don't hamper innovation

What's fascinating is that these challenges mirror the early days of containerization. Just as we witnessed with Kubernetes adoption, there's a clear maturity curve where organizations progress from "making it work" to "making it scale" to "making it efficient."
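As a rough illustration of the GPU quota item in the list above, the sketch below admits a job only if its team stays within a fixed GPU limit. The `GpuQuota` class and the team names are hypothetical. Real clusters would typically express this through Kubernetes resource quotas or a queueing layer rather than application code like this.

```python
from collections import defaultdict


class GpuQuota:
    """Tracks GPUs in use per team against a fixed per-team limit."""

    def __init__(self, limits):
        self.limits = dict(limits)      # team -> maximum GPUs
        self.in_use = defaultdict(int)  # team -> GPUs currently held

    def admit(self, team, gpus):
        """Reserve GPUs if the team stays within quota; return True on success."""
        limit = self.limits.get(team, 0)  # teams without a quota get no GPUs
        if self.in_use[team] + gpus > limit:
            return False
        self.in_use[team] += gpus
        return True

    def release(self, team, gpus):
        """Return GPUs to the pool when a job finishes."""
        self.in_use[team] = max(0, self.in_use[team] - gpus)


quota = GpuQuota({"research": 8, "search": 4})
a = quota.admit("research", 6)  # fits: 6 <= 8
b = quota.admit("research", 4)  # rejected: 6 + 4 > 8
quota.release("research", 6)
c = quota.admit("research", 4)  # fits once the first job has released its GPUs
d = quota.admit("unknown", 1)   # rejected: no quota configured for this team
print(a, b, c, d)
```

Defaulting unknown teams to zero is one possible design choice. It is the "sensible defaults" question from the same list: a permissive default is friendlier, but it makes cost controls harder to reason about.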

Community Spotlight: Emerging Solutions

I'm particularly impressed with several community-driven initiatives tackling these challenges:

KubeRay - Ray's integration with Kubernetes is becoming a cornerstone for distributed AI training.

Kueue - A Kubernetes SIG project providing job queuing for batch workloads; it shows significant promise for AI job scheduling.

Volcano - High-performance scheduling for ML workloads with robust gang scheduling capabilities.

These projects demonstrate how the cloud-native community adapts existing patterns to new workloads rather than reinventing everything from scratch—a pragmatic approach that accelerates AI infrastructure development.
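Gang scheduling, mentioned above for Volcano, means a distributed job starts only if all of its workers can be placed at once. The sketch below shows that all-or-nothing idea in its simplest form. It is not Volcano's algorithm or API: the `gang_schedule` function and the node names are hypothetical.

```python
def gang_schedule(workers_needed, gpus_per_worker, free_gpus_by_node):
    """Return one node per worker, or None if the whole gang cannot fit."""
    # Work on a copy so a failed attempt leaves the real capacity untouched.
    remaining = dict(free_gpus_by_node)
    assignment = []
    for _ in range(workers_needed):
        # First-fit: take the first node with enough free GPUs for one worker.
        node = next(
            (n for n, free in remaining.items() if free >= gpus_per_worker),
            None,
        )
        if node is None:
            return None  # one worker does not fit, so no worker is placed
        remaining[node] -= gpus_per_worker
        assignment.append(node)
    return assignment


nodes = {"node-a": 4, "node-b": 2}
fits = gang_schedule(3, 2, nodes)     # needs 6 GPUs, 6 are free
too_big = gang_schedule(4, 2, nodes)  # needs 8 GPUs, only 6 are free
print(fits, too_big)
```

Without the all-or-nothing rule, the second job could start three workers that then sit idle holding GPUs while they wait for a fourth that never arrives, which is the waste that gang scheduling is meant to avoid.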

What's Next on My Radar

In the coming weeks, I'll be diving deeper into:

Air-gapped LLM deployment patterns for regulated industries

The emerging "platform as product" approach to AI infrastructure

How teams are handling model versioning and deployment pipelines effectively

Let's Continue the Conversation

Have thoughts on multi-cluster AI environments or platform engineering challenges you’re facing? I’d love to hear from you! Reply to this newsletter, or catch me on socials at b.dougie.dev. Your real-world experiences help inform these discussions and shape future newsletters. Let’s learn from each other!
